Configuration management using profiles

TLDR

Configuration management becomes challenging as applications grow. This post documents a more scalable, profile-based approach to managing settings across development, testing, and production environments. It applies the principles of centralization and secrets management to drive consistency in multi-stage software environments.

Problem Statement: Failing at Scale with .env

Simple .env files work well for small projects but create problems as systems grow:

- Version Control: Teams struggle to track the latest configuration versions

- Security Risk: Secrets spread across multiple files

- Environment Confusion: Code becomes full of environment checks

These issues slow down development and create security risks. Developers end up juggling multiple .env files, which increases the risk of accidental commits, while hardcoded ad-hoc settings lead to inconsistent environments and hard-to-trace bugs during integration.

If not addressed systemically, it leads to engineering anti-patterns such as:

- .env Proliferation: A single .env file becomes unmanageable as services/modules multiply. Developers end up juggling multiple files, risking leaks (e.g., accidental commits) or misconfiguration.

- Collaboration Overhead: Sharing .env files across teams ("env pingpong") creates friction and security risks, especially with API keys or credentials.

- Fragmented State Awareness: Programs lack a unified way to detect their environment (dev vs. prod), leading to brittle, hardcoded conditionals.
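The last anti-pattern is easiest to see in code. Here is a minimal sketch, assuming a PROFILE environment variable (the name used later in this post) and a hypothetical resolve_profile helper. The profile is read and validated once, instead of each module checking the environment on its own.

```python
import os
from typing import Mapping, Optional

# Profile names assumed for illustration; they match the ones used later in the post.
VALID_PROFILES = ("development", "production", "experimental")


def resolve_profile(environ: Optional[Mapping[str, str]] = None) -> str:
    """Read the active profile once and fail fast on unknown values."""
    env = os.environ if environ is None else environ
    profile = env.get("PROFILE", "development")
    if profile not in VALID_PROFILES:
        raise ValueError(
            f"Invalid profile: {profile!r}. Must be one of: {', '.join(VALID_PROFILES)}"
        )
    return profile
```

Passing the mapping in as a parameter keeps the helper easy to test without touching the real environment.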

Concept: Configuration Profiles

The approach outlined here uses a ConfigManager that relies on configuration profiles. Instead of managing dozens of .env variables, we’ll implement a system where the profile itself (dev, test, prod) determines how configurations are loaded.

Some example profiles:

- Development: Focuses on speed. Here, hardcoded values and simple settings (like SQLite databases or mock APIs) let you iterate quickly.

- Production: Prioritizes security by fetching secrets from secure sources, ensuring that sensitive information is never hardcoded in the source code.

- Experimental: Offers isolated and ephemeral configurations to facilitate accurate testing without affecting live data.

Using profiles, all components (database, API, workers) derive their settings from the same profile. No more "DB thinks it’s dev but API thinks it’s prod" bugs.

Sidebar: What are we configuring?

This profile-based configuration strategy centralizes variable management and simplifies the transition between stages in the software delivery pipeline. Loosely, it needs to handle two main kinds of configuration:

- Infrastructural Configurations

- Domain Configurations

Infrastructure configurations are needed at application startup and inform how the application's infrastructure behaves. For example:

@dataclass
class DatabaseConfig(BaseConfig):
    """Infrastructure configuration example"""

    ENV_USER: ClassVar[str] = "POSTGRES_USER"

    user: str
    pool_size: int = 5

    @classmethod
    def load_production_config(cls) -> "DatabaseConfig":
        return cls(
            user=os.environ[cls.ENV_USER],
            pool_size=int(os.getenv("POOL_SIZE", "10"))
        )

# ...

def create_app() -> FastAPI:
    config = ConfigManager.load_profile()

    # Infrastructure initialization
    db = Database(config.database)
    cache = Redis(config.cache)
    # ...

Domain Configurations are slightly different. These affect how your business logic behaves in different environments. For example:

@dataclass
class SimulationConfig(BaseConfig):
    """Domain configuration example"""

    iterations: int
    tolerance: float

    @classmethod
    def load_development_config(cls) -> "SimulationConfig":
        return cls(
            iterations=100,  # Faster for development
            tolerance=1e-3   # Less precise for faster results
        )

# ...

class SimulationEngine:
    def __init__(self, config: SimulationConfig):
        self.config = config

    def run(self, model: Model) -> Results:
        for i in range(self.config.iterations):
            # ...

In general, infrastructure configurations are irreversible (you can’t change the DB mid-flight), so they must be rigorously validated at startup. Domain configurations, in contrast, are reversible (simulation parameters can be adjusted per request), which allows experimentation.
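To make the distinction concrete, here is a small sketch with hypothetical DatabaseSettings and SimulationSettings classes (named differently from the post's own classes on purpose). It assumes validation happens in `__post_init__` and that per-request overrides are made with `dataclasses.replace`. This is one possible way to do it, not the only one.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class DatabaseSettings:
    """Infrastructure: validated once at startup, then never changed."""

    user: str
    pool_size: int = 5

    def __post_init__(self) -> None:
        # Fail at construction time rather than on the first query.
        if not self.user:
            raise ValueError("user must not be empty")
        if self.pool_size < 1:
            raise ValueError("pool_size must be at least 1")


@dataclass(frozen=True)
class SimulationSettings:
    """Domain: a safe default that can be overridden per request."""

    iterations: int = 100
    tolerance: float = 1e-3


base = SimulationSettings()
# Per-request override: replace() returns a new object, the base is untouched.
precise = replace(base, iterations=10_000, tolerance=1e-6)
```

Freezing both dataclasses means an override can never leak back into the shared defaults.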

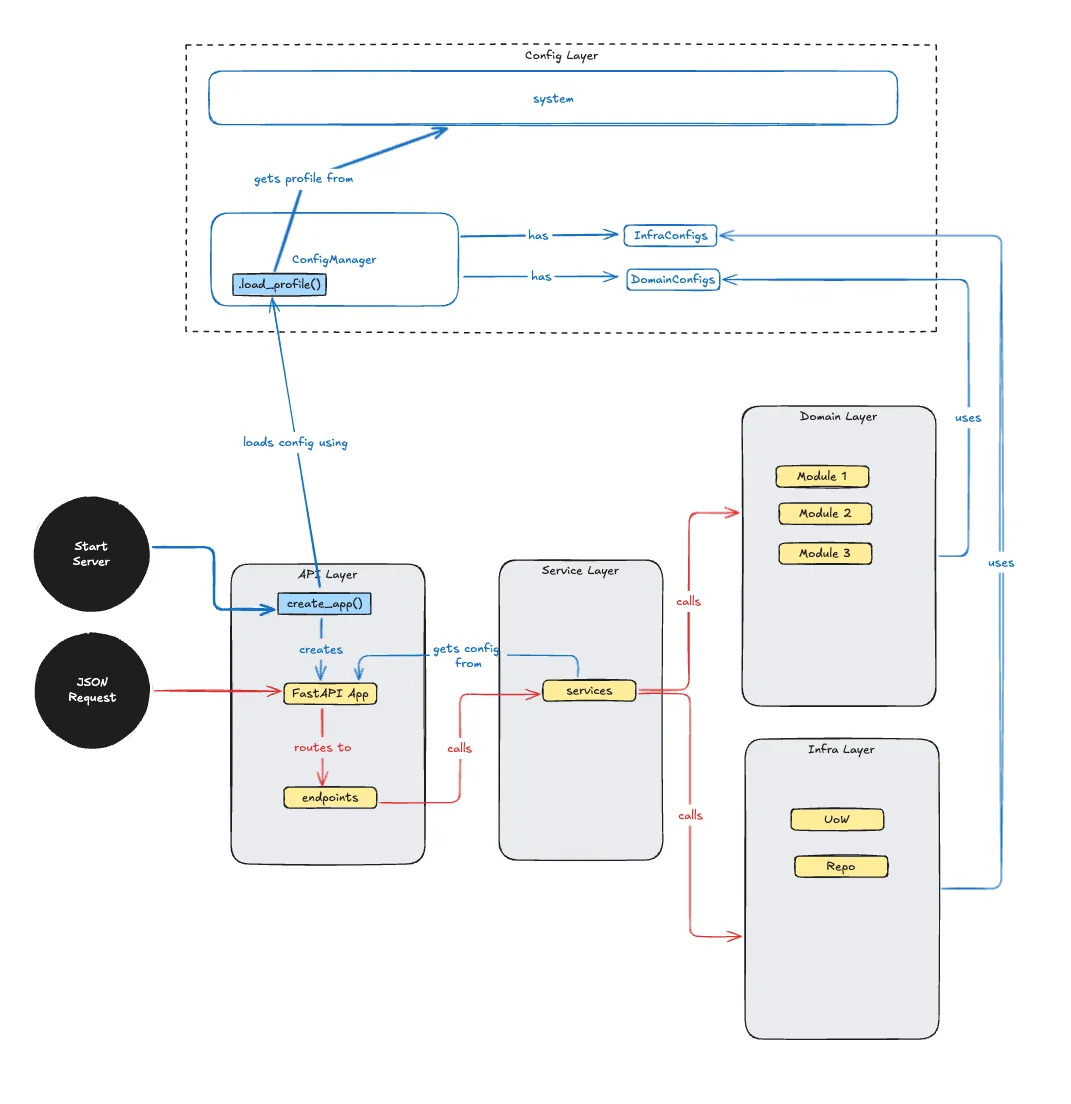

Implementation: A Layered Approach

Putting this together, we are going to stick with the layered architecture. The implementation uses three main layers:

Configuration Layer (ConfigManager)

- Loads the right profile (dev/test/prod)

- Coordinates all module configurations

API Layer (create_app())

- Uses configuration to set up FastAPI

- Initializes services with correct settings

Domain Layer (Individual Modules)

- Each module has its own config class

- Can be tested independently

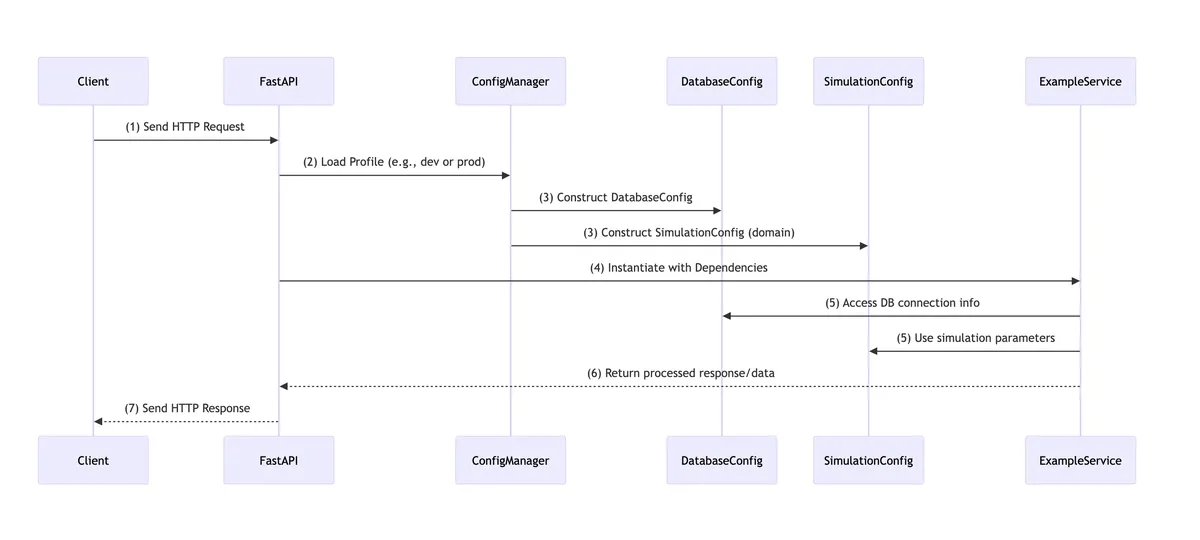

Execution Flow Overview

When the app is launched, here is a trace of the steps:

- Start of the server: Initiates the application and the request-response cycle.

- Configuration loading: Profiles are loaded and settings are applied based on the determined environment.

- API request handling: JSON requests are accepted, routed to the appropriate service, and processed by the domain modules, with infrastructure support provided as needed.

Implementation Sample: The Configuration Manager

The core idea is simple - each module defines how it should behave in different environments. Here's an example:

@dataclass
class DatabaseConfig(BaseConfig):
    # Map environment variables to settings
    ENV_USER: ClassVar[str] = "POSTGRES_USER"
    ENV_PASSWORD: ClassVar[str] = "POSTGRES_PASSWORD"

    # Runtime settings
    user: str
    password: str
    pool_size: int = 5

    @classmethod
    def load_development_config(cls):
        """Fast local development settings"""
        return cls(
            user="dev_user",
            password="dev_pass"
        )

    @classmethod
    def load_production_config(cls):
        """Secure production settings"""
        return cls(
            user=os.environ[cls.ENV_USER],
            password=os.environ[cls.ENV_PASSWORD]
        )

The ConfigManager then discovers and loads all these configs:

class ConfigManager:
    @classmethod
    def load_profile(cls, profile="development"):
        """Load all module configs for a profile"""
        configs = {}

        # Find all config classes
        for ConfigClass in BaseConfig.__subclasses__():
            # Load the right profile method
            method = f"load_{profile}_config"
            # Classes expose their name as __name__, not name
            configs[ConfigClass.__name__] = getattr(ConfigClass, method)()

        return configs
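The BaseConfig class that ConfigManager scans is not shown above. A minimal sketch of what it might look like, assuming it is an abstract base class that requires one loader per profile. CacheConfig and its ttl values are made up for illustration.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass


class BaseConfig(ABC):
    """Hypothetical base class: every module config implements each profile loader."""

    @classmethod
    @abstractmethod
    def load_development_config(cls) -> "BaseConfig": ...

    @classmethod
    @abstractmethod
    def load_production_config(cls) -> "BaseConfig": ...


@dataclass
class CacheConfig(BaseConfig):
    ttl: int

    @classmethod
    def load_development_config(cls) -> "CacheConfig":
        return cls(ttl=5)

    @classmethod
    def load_production_config(cls) -> "CacheConfig":
        return cls(ttl=300)


# __subclasses__() lists only direct subclasses whose definitions have already run.
discovered = [c.__name__ for c in BaseConfig.__subclasses__()]
```

One consequence of this discovery mechanism: a config class defined in a module that was never imported is not found, so the modules that define config classes need to be imported before the profile is loaded.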

Using Configurations in FastAPI

Generally, we use FastAPI's Depends, Request, and app.state to manage the implementation.

The configuration system integrates with FastAPI in three places:

- Application Setup

def create_app():
    # Load configurations
    config = ConfigManager.load_profile()

    app = FastAPI(
        debug=config.api.debug,
        title=config.api.title
    )

    # Store config for later use
    app.state.config = config
    return app

- Dependency Injection

def get_config(request: Request) -> Config:
    """FastAPI dependency to access config"""
    return request.app.state.config

@router.get("/items")
def list_items(config: Config = Depends(get_config)):
    # Use configuration settings
    return db.query(limit=config.api.page_size)

- Service Layer

class ItemService:
    def __init__(self, config: Config):
        self.page_size = config.api.page_size
        self.cache_ttl = config.cache.ttl
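Because the service only reads attributes from the config object it is given, it can be exercised without FastAPI or environment variables. A small sketch, assuming a hand-built stand-in config. The SimpleNamespace double and its values are illustrative.

```python
from types import SimpleNamespace


class ItemService:
    """Same shape as the service above: it only reads attributes from config."""

    def __init__(self, config) -> None:
        self.page_size = config.api.page_size
        self.cache_ttl = config.cache.ttl


# Hypothetical test double: no environment variables or FastAPI app required.
fake_config = SimpleNamespace(
    api=SimpleNamespace(page_size=25),
    cache=SimpleNamespace(ttl=60),
)
service = ItemService(fake_config)
```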

Production Setup with GitHub Secrets

Instead of sharing .env files, we use GitHub Secrets for production. Here's a sample:

GitHub Actions Workflow

name: Deploy Application

on:
  push:
    branches: [ main ]

jobs:
  deploy:
    runs-on: ubuntu-latest
    environment: production
    steps:
      - uses: actions/checkout@v2
      - name: Configure Environment
        env:
          POSTGRES_USER: ${{ secrets.POSTGRES_USER }}
          POSTGRES_PASSWORD: ${{ secrets.POSTGRES_PASSWORD }}
          POSTGRES_HOST: ${{ secrets.POSTGRES_HOST }}
          PROFILE: production
        run: |
          python validate_config.py
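The workflow calls validate_config.py, which is not shown above. Here is a minimal sketch of what such a script might contain, assuming its only job is to confirm that the variables set in the workflow are present and non-empty. The function names are hypothetical.

```python
import os
import sys
from typing import List, Mapping

# Variable names taken from the workflow above; extend as modules are added.
REQUIRED_VARS = ("POSTGRES_USER", "POSTGRES_PASSWORD", "POSTGRES_HOST")


def find_missing(environ: Mapping[str, str]) -> List[str]:
    """Return every required variable that is unset or empty."""
    return [name for name in REQUIRED_VARS if not environ.get(name)]


def main() -> int:
    """Report all missing variables at once and return a process exit code."""
    missing = find_missing(os.environ)
    if missing:
        print(f"Missing required variables: {', '.join(missing)}", file=sys.stderr)
        return 1
    print("Configuration OK")
    return 0
```

In a real script, the last line would be `sys.exit(main())` so that a missing variable fails the workflow step. Empty values are treated as missing because a secret that was never defined typically reaches the job as an empty string.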

Environment Template

And an environment template can be created (e.g. .env.template) to document required variables:

# Database Settings

POSTGRES_USER=<required>

POSTGRES_PASSWORD=<required>

POSTGRES_HOST=localhost

POSTGRES_PORT=5432

POSTGRES_DB=myapp

# API Settings

FASTAPI_CORS_ORIGINS=http://localhost:3000

PROFILE=development
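The template can also be read by tooling. A sketch, assuming the `<required>` marker convention shown above and a hypothetical parse_env_template helper that separates mandatory names from documented defaults:

```python
from typing import Dict, List, Tuple


def parse_env_template(text: str) -> Tuple[List[str], Dict[str, str]]:
    """Split template lines into required names and documented defaults."""
    required: List[str] = []
    defaults: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        # Skip blank lines, comments, and anything that is not NAME=value.
        if not line or line.startswith("#") or "=" not in line:
            continue
        name, _, value = line.partition("=")
        name, value = name.strip(), value.strip()
        if value == "<required>":
            required.append(name)
        else:
            defaults[name] = value
    return required, defaults


template = """
# Database Settings
POSTGRES_USER=<required>
POSTGRES_PORT=5432
"""
required, defaults = parse_env_template(template)
```

Splitting on the first `=` only keeps values such as URLs intact.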

Future Ideas & Improvements

That's as far as I've gotten with this. To round up, this profile-based system addresses fragmentation by driving configuration from a single source of truth, the chosen “profile” (e.g., dev, test, prod), which brings some benefits:

- Centralization – All infrastructure (database, cache) and domain (simulation, business logic) settings come from the same profile.

- Scalability – Adding a new module (e.g., a queueing system) is as simple as defining a new config class.

- Security – Production secrets live securely in GitHub Secrets, Vault, or any other secret manager, never in code.

Noting some ideas for future development:

- Stricter Environment Validation – Check for missing or malformed env variables at startup.

- Deeper Vault Integrations – Source secrets from a centralized vault or secret manager.

- CI/CD Profiling – Dynamically switch to ephemeral test configs in PR environments.
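As an illustration of the last idea, an experimental loader could hand each PR or test run its own database name. This is a sketch only: EphemeralDatabaseConfig, the test_ prefix, and the credentials are all hypothetical.

```python
import uuid
from dataclasses import dataclass


@dataclass
class EphemeralDatabaseConfig:
    """Hypothetical config for short-lived PR or test environments."""

    user: str
    host: str
    database: str

    @classmethod
    def load_experimental_config(cls) -> "EphemeralDatabaseConfig":
        # A unique suffix keeps parallel test runs from sharing state.
        suffix = uuid.uuid4().hex[:8]
        return cls(user="test_user", host="localhost", database=f"test_{suffix}")


first = EphemeralDatabaseConfig.load_experimental_config()
second = EphemeralDatabaseConfig.load_experimental_config()
```

Each call yields a different database name, so creating and dropping the database can be tied to the lifetime of a single run.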

Appendix: Deep Dives

Configuration Manager Implementation

I'll dig a bit deeper into the infrastructure implementation, because that's really the core of this. Each module has its own config class. A config class has a few simple parts:

- Environment Variable Mapping: Class variables pointing to environment variable names.

@dataclass
class DatabaseConfig(BaseConfig):
    # Map env vars to settings
    ENV_USER: ClassVar[str] = "POSTGRES_USER"
    ENV_PASSWORD: ClassVar[str] = "POSTGRES_PASSWORD"

- Runtime Settings: The actual fields (e.g., user, password).

    # Runtime configuration
    user: str
    password: str
    pool_size: int = 5

- Profile Methods: Load dev, production, or experimental settings.

    @classmethod
    def load_development_config(cls):
        return cls(
            user="dev_user",
            password="dev_pass"
        )

- Helper Methods: For tasks like assembling DB connection strings or initializing services at server start.

    def get_connection_url(self) -> str:
        return f"postgresql://{self.user}:{self.password}@{self.host}"

Here is a sample implementation in full:

import os
from dataclasses import dataclass
from typing import ClassVar

@dataclass
class DatabaseConfig(BaseConfig):
    # [1]
    # Environment variable names
    ENV_USER: ClassVar[str] = "POSTGRES_USER"
    ENV_PASSWORD: ClassVar[str] = "POSTGRES_PASSWORD"
    ENV_HOST: ClassVar[str] = "POSTGRES_HOST"
    ENV_PORT: ClassVar[str] = "POSTGRES_PORT"
    ENV_DATABASE: ClassVar[str] = "POSTGRES_DB"
    ENV_POOL_SIZE: ClassVar[str] = "POSTGRES_POOL_SIZE"
    ENV_MAX_OVERFLOW: ClassVar[str] = "POSTGRES_MAX_OVERFLOW"

    # [2]
    # Settings
    user: str
    password: str
    host: str
    port: int
    database: str
    pool_size: int = 5
    max_overflow: int = 10

    # [3]
    @classmethod
    def load_development_config(cls) -> "DatabaseConfig":
        return cls(
            user="alephuser",
            password="alephpassword",
            host="localhost",
            port=5432,
            database="alephdb",
        )

    @classmethod
    def load_production_config(cls) -> "DatabaseConfig":
        return cls(
            user=os.environ[cls.ENV_USER],
            password=os.environ[cls.ENV_PASSWORD],
            host=os.environ[cls.ENV_HOST],
            port=int(os.environ[cls.ENV_PORT]),
            database=os.environ[cls.ENV_DATABASE],
            pool_size=int(os.getenv(cls.ENV_POOL_SIZE, "10")),
            max_overflow=int(os.getenv(cls.ENV_MAX_OVERFLOW, "20")),
        )

    # [4]
    def get_postgre_uri(self) -> str:
        """Get the PostgreSQL connection URI"""
        return f"postgresql://{self.user}:{self.password}@{self.host}:{self.port}/{self.database}"
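One caveat with building the URI by plain string formatting: a password containing characters such as `@` or `/` would produce an ambiguous URI. A sketch of a safer variant, assuming a hypothetical standalone build_postgres_uri function and percent-encoding via the standard library:

```python
from urllib.parse import quote


def build_postgres_uri(user: str, password: str, host: str, port: int, database: str) -> str:
    """Percent-encode credentials so reserved characters do not break the URI."""
    safe_user = quote(user, safe="")
    safe_password = quote(password, safe="")
    return f"postgresql://{safe_user}:{safe_password}@{host}:{port}/{database}"


# Example credentials are made up for illustration.
uri = build_postgres_uri("app", "p@ss/word", "localhost", 5432, "appdb")
# uri == "postgresql://app:p%40ss%2Fword@localhost:5432/appdb"
```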

The ConfigManager discovers and loads these configurations, thanks to the use of an ABC base class and __subclasses__():

import os
from typing import Dict, Optional, Type

class ConfigManager:
    """Manages configuration profiles."""

    @staticmethod
    def _get_config_classes() -> Dict[str, Type[BaseConfig]]:
        """Helper to dynamically load all configuration classes"""
        configs: Dict[str, Type[BaseConfig]] = {}
        for config_class in BaseConfig.__subclasses__():
            # DatabaseConfig -> "database"
            name = config_class.__name__.replace("Config", "")
            key = "".join(
                ["_" + c.lower() if c.isupper() else c for c in name]
            ).lstrip("_")
            configs[key] = config_class
        return configs

    def __init__(self, load_method: str) -> None:
        """Initialize profile with specified loading method"""
        self._load_configs(load_method)

    def _load_configs(self, load_method: str) -> None:
        """Load all configs using specified method"""
        for name, config_class in self._get_config_classes().items():
            if not hasattr(config_class, load_method):
                raise ValueError(
                    f"Config class {config_class.__name__} missing required "
                    f"method: {load_method}"
                )
            method = getattr(config_class, load_method)
            setattr(self, name, method())

    @classmethod
    def load_profile(cls, profile: Optional[str] = None) -> "ConfigManager":
        """Load configuration profile"""
        env = profile or os.getenv("PROFILE", "development")

        method_map = {
            "development": "load_development_config",
            "production": "load_production_config",
            "experimental": "load_experimental_config",
        }

        if env not in method_map:
            raise ValueError(
                f"Invalid profile: {env}. Must be one of: "
                f"{', '.join(method_map.keys())}"
            )

        return cls(method_map[env])
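The attribute names on the loaded ConfigManager come from the class names, so the naming of each config class matters. The sketch below copies the key derivation from _get_config_classes into a standalone config_key function (a hypothetical name) to show what a few class names turn into:

```python
def config_key(class_name: str) -> str:
    """Mirror the key derivation used in _get_config_classes above."""
    name = class_name.replace("Config", "")
    return "".join("_" + c.lower() if c.isupper() else c for c in name).lstrip("_")


keys = {n: config_key(n) for n in ("DatabaseConfig", "FastapiConfig", "FastAPIConfig")}
# keys["DatabaseConfig"] == "database"
# keys["FastapiConfig"]  == "fastapi"
# keys["FastAPIConfig"]  == "fast_a_p_i"
```

Every capital letter starts a new underscore-separated segment, so acronyms are split letter by letter. For an attribute such as config.fastapi to exist, the class would presumably need to be named FastapiConfig rather than FastAPIConfig.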

Server and Services Refactor

Following the Single Entry Point principle, create_app() is where environment detection, configuration loading, and service initialization occur. Every component in the app shares these same settings through the application state.

Sample: Server Creation

def create_app() -> FastAPI:
    """Application factory with configuration and dependencies"""
    # Load configuration
    config = ConfigManager.load_profile()

    # Create FastAPI app with config
    app = FastAPI(
        title="API",
        description="API",
        version="1.0.0",
        debug=config.prefect.debug
    )

    # Configure CORS from config
    app.add_middleware(
        CORSMiddleware,
        allow_origins=config.fastapi.cors_origins,
        allow_credentials=True,
        allow_methods=["*"],
        allow_headers=config.fastapi.cors_headers or ["*"]
    )

    # Initialize core services
    uow = UOWAtlas(config.database.get_postgre_uri())

    # Store in app state
    app.state.config = config
    app.state.uow = uow

    # Include routers with dependencies
    app.include_router(
        config_page_router,
        prefix=config.prefect.api_prefix,
        tags=["configurations"]
    )
    app.include_router(
        preset_page_router,
        prefix=config.prefect.api_prefix,
        tags=["presets"]
    )

    return app

Sample: Routers, using Depends

router = APIRouter()

def get_uow(request: Request) -> UOWAtlas:
    return request.app.state.uow

def get_service(uow: UOWAtlas = Depends(get_uow)) -> PlantConfigurationService:
    return PlantConfigurationService(uow)

def get_config(request: Request) -> ConfigManager:
    return request.app.state.config

@router.get("/configurations/{name}")
async def get_configuration(
    name: str,
    user_id: UUID = Depends(get_current_user),
    service: PlantConfigurationService = Depends(get_service)
) -> PlantConfiguration:
    """Thin controller that delegates to service"""
    return service.get_plant_configuration(name, user_id)

Sample: Services, using config provisioned:

class PlantConfigurationService:
    """Service layer for plant configuration management"""

    def __init__(self, uow: UOWAtlas):
        self._uow = uow

    def get_plant_configuration(self, config_name: str, user_id: UUID) -> PlantConfiguration:
        """Get existing plant configuration"""
        with self._uow:
            model = self._get_atlas(config_name)
            return PlantConfiguration.hydrate_schema(
                model.atlas_obj,
                model.is_template
            )

    def _get_atlas(self, config_name: str):
        """Helper to get atlas model by name"""
        model = self._uow.repo.load(config_name)
        if model is None:
            raise ConfigNotFound(f"Configuration '{config_name}' not found")
        return model

Flow of Control

- Request Arrives

→ FastAPI creates a Request object.

- Dependency Injection

→ FastAPI calls get_config(request) to fetch the config.

→ Calls get_uow() to get a database session.

- Execute Route Logic

→ Uses the injected uow and config to process the request.